Godot Version

v4.2.1.stable.official [b09f793f5]

Question

How do you guys combine PBR materials to use in Godot?

So, I know this might seem obvious to experienced users (feel free to skip to the question), but I just started learning 3D modelling and I wanted to make a level of a cave for my first-person adventure exploration game. I’ve been studying tutorials non-stop (Blender tutorials, shading tutorials, Godot tutorials, etc.) and I was learning a lot. Despite feeling a little overwhelmed, I tried to follow a simple tutorial to make a cave (courtesy of Rob Tuytel from the Poly Haven team).

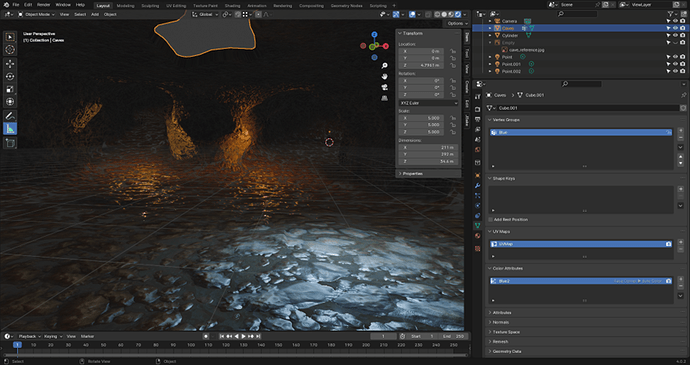

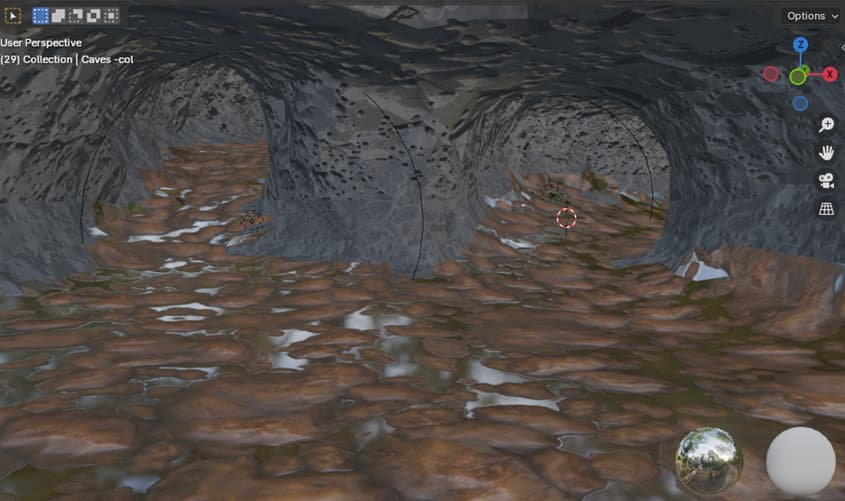

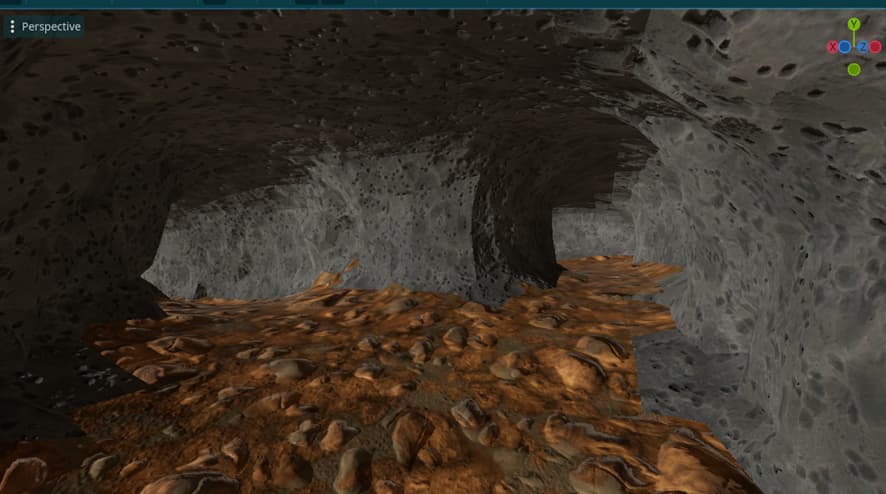

The result looked amazing, and after a couple of hours I was able to create something close enough in my Blender project:

Notice how the walls are made of rough-looking rock, whereas the floor is wet stones. I thought it looked pretty nice, so I tried exporting to Godot… and that’s when the problems started.

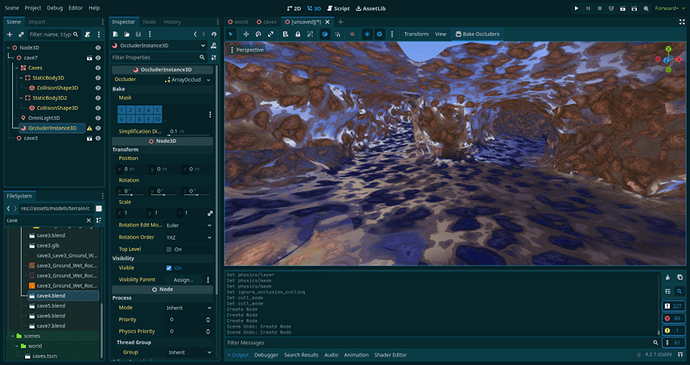

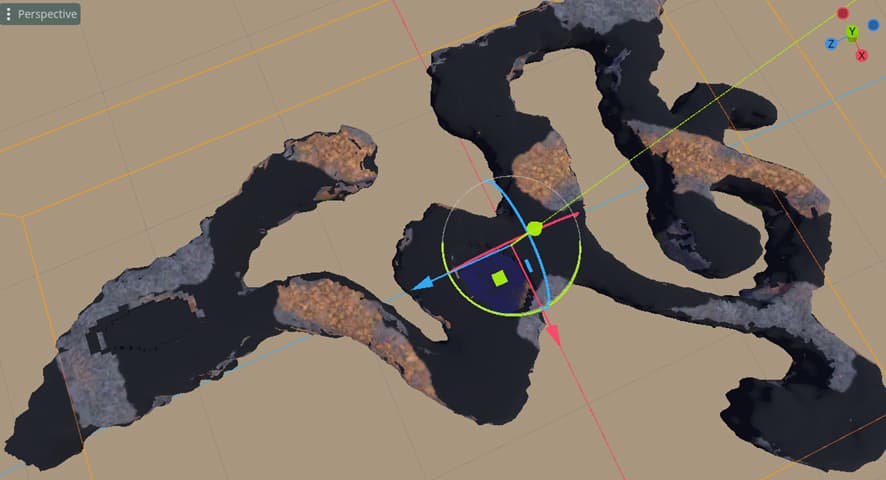

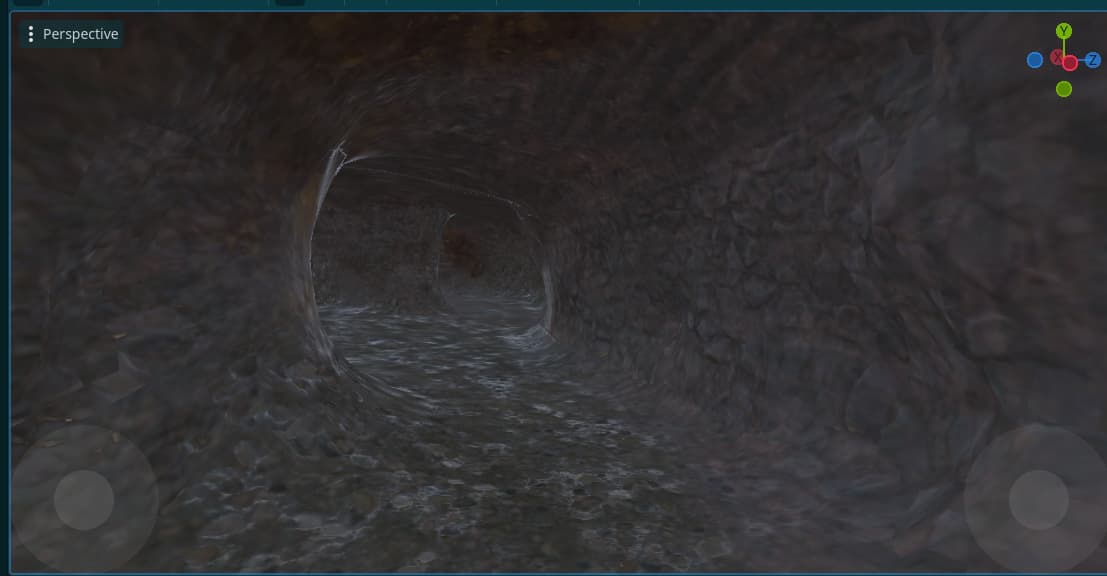

Uhm, what’s going on? (exported as glTF)

So, exporting materials from Blender to Godot has a few caveats. The tutorial I followed used vertex painting to achieve the seamless look between two different PBR materials. However, upon exporting, only one of the two materials remains, and it is tinted blue by the vertex painting. I found a way to stop the blue paint from showing up in the export, but no way to keep both materials…

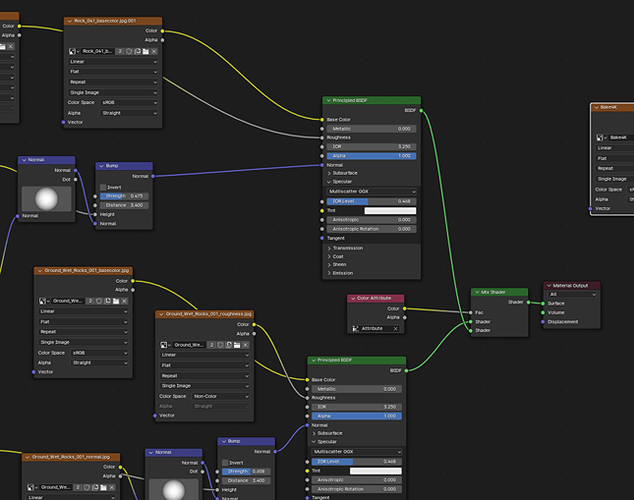

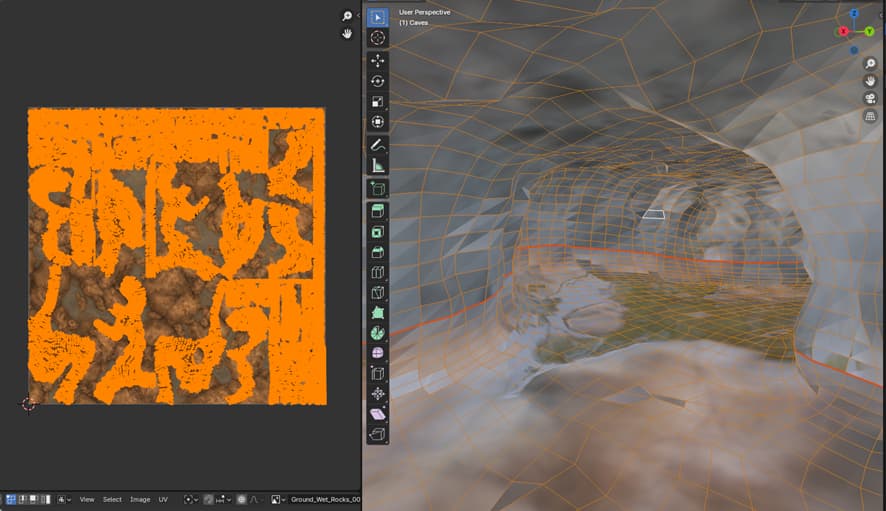

The shader node setup (two principled BSDFs for two materials that are later mixed using the vertex painting as a mask [the blue color attribute]).
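To make the mask idea concrete, here is a minimal Python sketch of what "mixing by a vertex-painted mask" means numerically. It is not the Blender node graph itself: `PbrSample`, `lerp` and `mix_materials` are hypothetical names, and it blends the material inputs (albedo, roughness) rather than the two shaded results, which is an approximation of what a Mix Shader does.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PbrSample:
    """One texel of a PBR material (hypothetical, reduced to two channels)."""
    albedo: Tuple[float, float, float]
    roughness: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation: returns a at t=0 and b at t=1."""
    return a + (b - a) * t


def mix_materials(rock: PbrSample, wet: PbrSample, mask: float) -> PbrSample:
    """Blend two material samples using a painted mask value in [0, 1]."""
    t = min(max(mask, 0.0), 1.0)  # clamp so stray paint values stay valid
    albedo = tuple(lerp(r, w, t) for r, w in zip(rock.albedo, wet.albedo))
    return PbrSample(albedo, lerp(rock.roughness, wet.roughness, t))


# Made-up example values: dry rock is rough, wet stone is smooth.
rock = PbrSample((0.4, 0.4, 0.4), 0.9)
wet = PbrSample((0.2, 0.2, 0.2), 0.1)
halfway = mix_materials(rock, wet, 0.5)
```

A mask of 0 gives pure rock, 1 gives pure wet stone, and anything in between is the soft transition the vertex paint produces.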

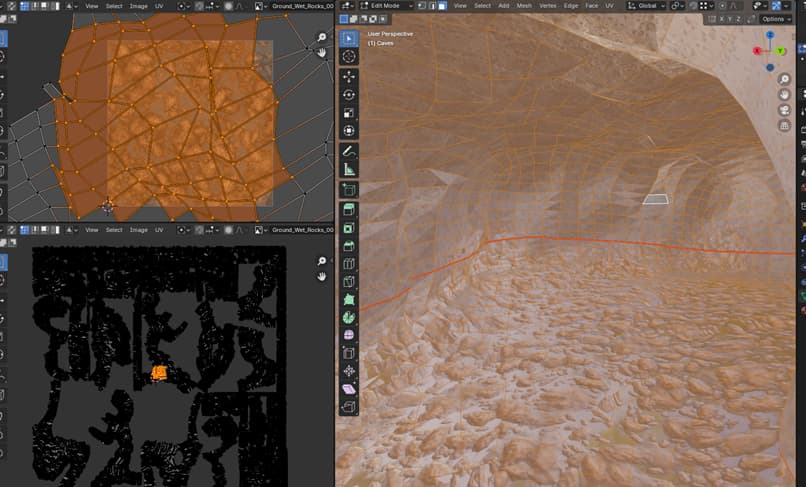

At this point I had the mostly seamless fusion of both textures I wanted in Blender (seen here on the right), but the UV scaling was too large. Compare top left (you can see the texture underneath the UV map) to the bottom left, where you can see the actual extent of the UV map:

The textures I was using in the materials were 1K resolution, but my mesh was waaay bigger than anything else I had seen in any tutorial. I had to scale the UVs 15x in order for the detail in the texture to be visible and not look stretched.
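For reference, scaling the UVs 15x amounts to the following, shown as a small Python sketch. `tile_uv` is a hypothetical helper, and it assumes the texture is set to repeat, so only the fractional part of the scaled coordinate is used for the lookup.

```python
def tile_uv(u: float, v: float, scale: float = 15.0) -> tuple:
    """Scale a UV coordinate and wrap it back into [0, 1) (repeat mode)."""
    return (u * scale) % 1.0, (v * scale) % 1.0


def tile_count(scale: float = 15.0) -> float:
    """Number of copies of the texture across the whole 0-1 UV square."""
    return scale * scale


# The middle of the mesh's UV square lands in the middle of one tile.
u, v = tile_uv(0.5, 0.5)
copies = tile_count()  # 15 x 15 repeats of the same 1K image
```

So the same 1K image is reused 225 times across the surface, which is why the detail stays sharp without needing a bigger texture.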

This didn’t seem like a problem at first until I encountered what is the most popular solution for exporting materials in a model: baking. I didn’t understand it at first, so I put some hours into learning to unwrap the UVs, marking the seams, using automatic projection, connecting the texture nodes in the shader, etc. Finally, I was ready to bake some cave systems…

As soon as I pressed BAKE, my computer froze and shut down. Apparently, the sheer number of polygons ensured that what was being baked was my CPU. I had to tweak the settings a couple of times, but it was by no means an intuitive process. The CPU overheated a couple more times while doing this, and my rig is by no means low-spec (AMD Ryzen 5 3600 + Nvidia RTX 3060).

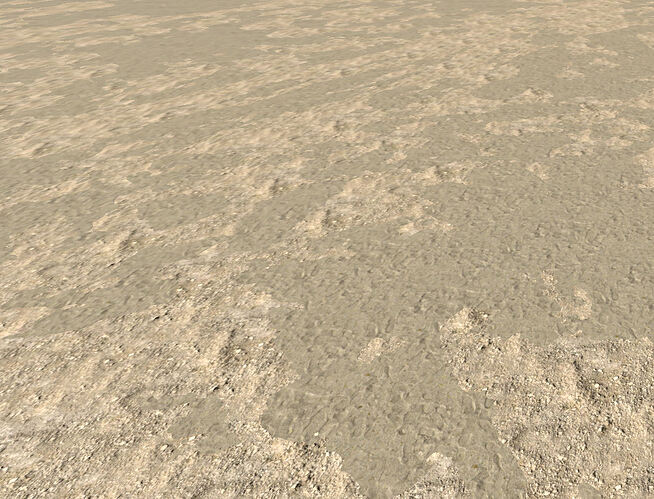

I tried several configurations and I was finally able to obtain a baked texture without my CPU shooting itself after a couple of hours thanks to using SimpleBake… Here was my new texture:

Uhmm… I think something’s not right.

Ok, so this was probably my fault, and fixing the black spaces led to this:

The UV map has been scaled down to fit in the 1K texture, but that makes every rock and puddle 15 times its intended size on the model surface, hence the stretched preview in the viewport.
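The arithmetic behind this can be sketched in a few lines of Python. The function names are hypothetical, "1K" is taken to mean 1024 pixels per side, and the calculation idealizes the unwrap as filling the whole 0-1 UV square.

```python
def required_bake_resolution(tile_px: int, repeats: int) -> int:
    """Pixels per side a single baked map needs to keep the tiled detail."""
    return tile_px * repeats


def detail_loss_factor(tile_px: int, repeats: int, bake_px: int) -> float:
    """How many times coarser the bake is than the original tiled look."""
    return required_bake_resolution(tile_px, repeats) / bake_px


needed = required_bake_resolution(1024, 15)  # 15360 px per side
loss = detail_loss_factor(1024, 15, 1024)    # 15.0 when baking back to 1K
```

Under those assumptions, a single baked map would need to be roughly 15360 x 15360 pixels to match the tiled version, which is why baking to 1K makes everything look 15 times too large.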

At this point I had no idea what to do. I spent hours googling and looking for tutorials until I found someone on a forum mentioning that you can’t really do what I wanted (baking such small textures into huge models as repeating tiles), so I needed other solutions.

Most tutorials out there teach you to apply a texture to a mesh or surface and be done with it. If you want several textures in one object, assign them individually to each surface. I wanted a more organic look, but without more options I decided to try:

Blender (top) vs Godot (bottom) comparison.

Disregard the lighting since I made no attempt at making it similar in both editors, but do look at the seams between the two textures. I couldn’t find a way to implement the texture interpolation I had achieved before using the vertex painting mask, but I guess it works.

Interpolation between the textures, disregard the weird shading in the geometry.

And this is as far as I got. I am aware I’m just starting out and I have a lot to learn, including the limits of Blender and Godot, but I still need to ask… Is there any way to achieve the interpolation between the two PBR materials that I intended? There has to be, right? AAA games do it all the time. Maybe I need to use Adobe’s Substance 3D Painter? Or InstaMAT Studio? Or learn Godot shaders?