Here is the refactored code

PhsicsSynchronizer Code

class_name PhysicsSynchronizer

extends MultiplayerSynchronizer

@onready var sync_object : PhysicsBody3D = get_node(root_path)

@onready var body_state : PhysicsDirectBodyState3D = \

PhysicsServer3D.body_get_direct_state( sync_object.get_rid() )

@export var sync_pos : Vector3

@export var sync_lvel : Vector3

@export var sync_avel : Vector3

@export var sync_quat : Quaternion

@export var sync_frame : int = 0

var ring_buffer:RingBuffer = RingBuffer.new()

var last_frame = -1

var set_num = 0

enum {

ORIGIN,

LIN_VEL,

ANG_VEL,

QUAT, # the quaternion is used for an optimized rotation state

}

#func _ready():

#synchronized.connect(_on_synchronized)

func _exit_tree():

ring_buffer.free()

#copy state to array

func get_state(state : PhysicsDirectBodyState3D ):

sync_pos = state.transform.origin

sync_quat = state.transform.basis.get_rotation_quaternion()

sync_lvel = state.linear_velocity

sync_avel = state.angular_velocity

#copy array to state

func set_state(state : PhysicsDirectBodyState3D, data:Array ):

state.transform.origin = data[ORIGIN]

state.linear_velocity = data[LIN_VEL]

state.angular_velocity = data[ANG_VEL]

state.transform.basis = Basis(data[QUAT])

func get_physics_body_info():

# server copy for sync

get_state( body_state )

func set_physics_body_info():

# client rpc set from server

var data :Array = ring_buffer.remove()

while data.is_empty():

return

set_state( body_state, data )

func _physics_process(_delta):

if is_multiplayer_authority():

sync_frame += 1

get_physics_body_info()

else:

set_physics_body_info()

# make sure to wire the "synchronized" signal to this function

func _on_synchronized():

if is_previouse_frame():

return

ring_buffer.add([

sync_pos,

sync_lvel,

sync_avel,

sync_quat,

])

func is_previouse_frame() -> bool:

if sync_frame <= last_frame:

print("previous frame %d %d" % [sync_frame, last_frame] )

return true

else:

last_frame = sync_frame

return false

class RingBuffer extends Object:

const SAFETY:int = 1

const CAPACITY:int = 4 + SAFETY

var buf:Array[Array]

var head:int = 0

var tail:int = 0

func _init():

buf.resize(CAPACITY)

func add(frame:Array):

if _increment(head) == tail: # full

_comsume_extra()

if is_low():

_produce_extra(frame)

buf[head]=frame

head = _increment(head)

func _comsume_extra():

#print( "RingBuffer: consume_extra")

var next_index = _increment(tail)

buf[next_index] = _interpolate(buf[tail], buf[next_index],0.5)

tail = next_index

func _produce_extra(frame:Array):

#print("RingBuffer: produce_extra")

var first_frame = _interpolate(buf[tail],frame, 0.33) # assume only one frame exists tail should point at it

var second_frame = _interpolate(buf[tail],frame, 0.66) # assume only one frame exists tail should point at it

buf[head]=first_frame

head = _increment(head)

buf[head] = second_frame

head = _increment(head)

func _interpolate(from:Array, to:Array, percentage:float) -> Array:

var frame:Array = [

from[ORIGIN].lerp(to[ORIGIN], percentage),

from[LIN_VEL].lerp(to[LIN_VEL], percentage),

from[ANG_VEL].lerp(to[ANG_VEL], percentage),

from[QUAT].slerp(to[QUAT], percentage)

]

return frame

func _increment(index:int)->int:

index += 1

if index == CAPACITY: # avoid modulus

index = 0

return index

func remove() -> Array:

var frame : Array = buf[tail]

if is_empty() or is_low():

frame = []

else:

tail = _increment(tail)

return frame

func is_empty() -> bool:

return tail == head

func is_low() -> bool:

return _increment(tail) == head

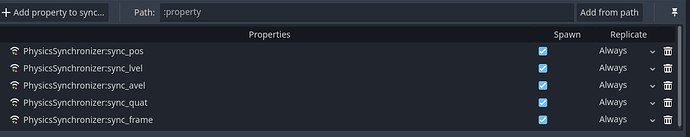

Make sure to connect synchonized signal to _on_synchronized

and add the following props to the replication config.

It removes “the correct_error” function that was never great to begin with and adds a ring buffer to manage frames from the server.

it can be tweeked but it is recommended that it stay at 4+1 at a minimum. This is because I tried to make it lock-free by keeping a space between the head and the tail. (this was never tested)

some ring buffer features:

- if the server packets are coming in too fast we will consume the frames faster to catch up, by interpolating two frames at the tail and dropping the most oldest frame.

- if the server is going to slow we will “stretch” two known frames into 4. if we only have one frame in buffer and a new frame coming in, we interpolate two extra frames between the tail and the newest frame. one at 33% and one 66%, with the newest frame being at 100%. (this should be tweaked depending on the buffer size)

this is all to maintain a healthy amount of frames without falling behind or getting ahead, but could be changed based on needs.

I profiled the change and could not detect a meaningful change +/- < 1% .

There are still some jump occurances now and then but overall it is better.