I’ve been mulling this over (more interesting than my job today) and I think I might have a plan of attack. It’s hacky and unproven, but here I go!

First, each Line2D is the child of a BackBufferCopy set to the rect of the screen/viewport. I think this will give you the line as a texture on transparency that you can work with in the shader. It has the advantage of every Line2D being normalized to the same size (i.e. a given UV is in the same location on every line).

Next, you set up what I’m going to call your density map. This is a texture that stores how many lines are present at a given pixel, and it’s where this idea gets a bit silly. You create a viewport (a SubViewport) with the same pixel dimensions as your screen space (or whatever you defined in your BackBufferCopy), and for every line in your project you create a corresponding line in that viewport. Basically you are mirroring everything from your main scene here, so it’ll need to update whenever the real lines change.

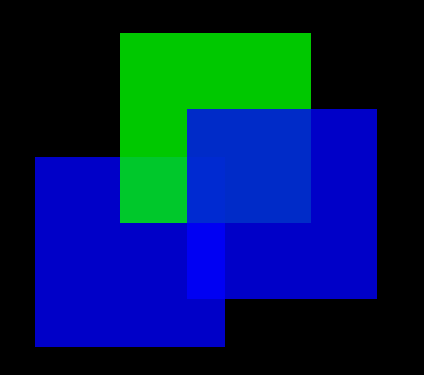

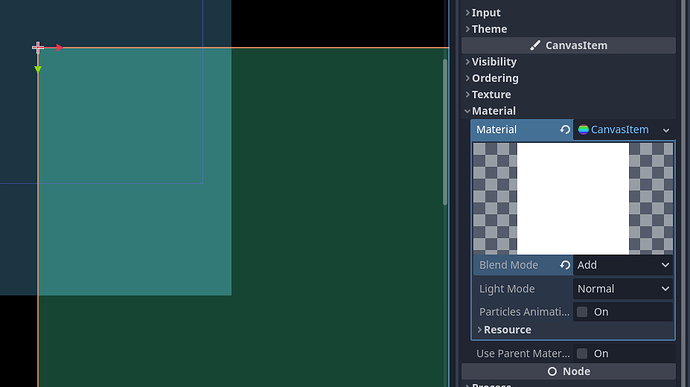

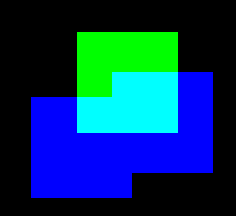

You set the color of all of those lines to a very dark gray, something like Color(1.0/256.0, 1.0/256.0, 1.0/256.0) (write it with floats, since 1/256 with integers is integer division in GDScript and comes out as 0), and give them an “add” blend mode, either render_mode blend_add in a canvas item shader or a CanvasItemMaterial set to Add. This will result in a texture that gets lighter where there are more lines and stays darker where there are fewer. With no lines the color should be (0,0,0), with one line (1/256, 1/256, 1/256), with two lines (2/256, 2/256, 2/256), and so on.
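Not Godot code, just a quick sanity check of the idea in plain Python. It fakes the density map on a tiny grid: every line adds 1/256 to each pixel it covers, and multiplying the stored value by 256 gives the line count back. The grid size, the pixel lists and the helper names (build_density_map, line_count) are all made up for illustration.

```python
# Toy model of the density map: additive blending of very dark gray lines.
STEP = 1.0 / 256.0  # brightness one line contributes to a pixel


def build_density_map(width, height, lines):
    """Each line is a list of (x, y) pixels it covers; add STEP per line per pixel."""
    density = [[0.0] * width for _ in range(height)]
    for pixels in lines:
        for x, y in set(pixels):  # a single line brightens a pixel only once
            # Clamp at 1.0, the way a normal colour channel would saturate.
            density[y][x] = min(density[y][x] + STEP, 1.0)
    return density


def line_count(value):
    """Recover the number of lines from a stored brightness value."""
    return round(value * 256.0)


# Three hypothetical lines on a 4x3 grid that all cross at pixel (2, 1).
horizontal = [(x, 1) for x in range(4)]
vertical = [(2, y) for y in range(3)]
diagonal = [(1, 0), (2, 1), (3, 2)]

density = build_density_map(4, 3, [horizontal, vertical, diagonal])
```

Here line_count(density[1][2]) is 3 at the crossing, 1 on a pixel covered by a single line, and 0 on empty pixels. The clamp also shows the limit of the trick: a single channel stored this way tops out at 256 overlapping lines.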

Alright, you send that viewport texture to a uniform sampler2D on each line in your real scene. You can do this via script by calling material.set_shader_parameter() with the texture you get from the viewport’s get_texture(). Not sure if you have to send it each frame, and not sure if tree order will affect this… but now you have the data you need.

Each BackBufferCopy will need to use the “add” render mode. Each fragment function will look something like this:

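```glsl
shader_type canvas_item;
render_mode blend_add;

uniform sampler2D density_map;

void fragment() {
	// The line count lives in the colour channels, so read .r rather than .a.
	// max() avoids dividing by zero where the density map is empty.
	float count = max(texture(density_map, UV).r * 256.0, 1.0);
	COLOR.a = 1.0 / count;
}
```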

This should drop the alpha to 1/(number of lines found at a given pixel), and the add mode then sums them all together. Functionally you are averaging, just reversing the order of operations: dividing first, then finding the sum.
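If the “divide first, then sum” bit feels suspicious, here is the same arithmetic in plain Python (again not Godot code, only the math). It treats one colour channel of each overlapping line as a float, scales each by 1/n, adds them up, and compares against the ordinary mean. The function name and the sample values are made up for illustration.

```python
def blend_divide_then_add(channel_values):
    """Scale each line's channel by alpha = 1/n, then add them like an additive blend."""
    n = len(channel_values)
    total = 0.0
    for value in channel_values:
        total += value * (1.0 / n)
    return total


# Hypothetical red-channel values of three lines overlapping on one pixel.
overlapping = [0.2, 0.5, 0.8]

blended = blend_divide_then_add(overlapping)
mean = sum(overlapping) / len(overlapping)

# The two agree up to floating point rounding.
matches = abs(blended - mean) < 1e-9
```

So as long as each line gets the right count from the density map, adding the pre-divided colours lands on the average.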

Ooft. That was an essay! And a fun thought experiment. Seems like a lot of work, I wish there were more render modes!!! Again, totes unproven, but might work ¯_(ツ)_/¯.

PS. Because you are using the add mode in the shader too, you might want to build all your real lines in their own viewport. Then you can lay the whole viewport texture over your background without the add blending also brightening whatever is behind the lines.